Arthur S. Davis, Yash Chhunchha National University 8/31/2012

With the human population expanding on a planet with finite natural resources, the need for efficiency in all things grows every day. We are compelled by the inarguable logic of weighing humanity's needs against available, realistic solutions. Green Computing, a recent trend in computer science, aims to promote efficiency through the analysis and implementation of "earth friendly" solutions that limit waste in the design, manufacture and operation of computers. Green Computing can include anything from bicycle-powered PDAs to giant server farms trying to squeeze a few watts out of each node. In all of its forms, Green Computing uses efficiency as a tool to limit human impact on our environment.

One of the drawbacks of Green Computing is its lack of romanticism. Presenting a room full of businessmen with an environmentally conscious plan to improve the company is not always easy. We can garner interest by proving that Green Computing's drive towards efficiency equates to cost savings and increased profits. Using money as a lever, we can implement Green Computing as a solution to the "needs vs. resources" problem that is currently plaguing our planet.

One obvious approach is to attack energy usage at its source. Where we plug in makes a big difference and can greatly reduce the energy drawn from the grid. A.R. Kale and A. Muhtaroglu presented "Green PG: A low cost, modular, pedal-powered 5-20 V parallel DC source for mobile computing applications" at the 2010 International Conference on Energy Aware Computing [2]. The device attaches to an exercise machine or a bicycle and can power phones, laptops or other electronic devices. Because Green PG is powered by the rider, its impact on the environment during operation is nearly eliminated.

Green PG source: Google images

The benefit of Green PG as an energy-saving technology is offset by the costs of manufacturing and marketing the devices. Behind every great product is the business that must be built to support it. Resources are mustered. A manufacturing site is chosen or built. Further engineering may be required to take the product beyond the prototype stage. The marketing team must choose the type and scope of media used for promotion. Packaging must be designed and manufactured. Each new energy-saving gadget therefore has to be viewed with a wide focus: any idea or machine in the field of green technology must be evaluated as part of a larger process.

Gilligan's Island TV show, 1960s (source: Google Images)

Harnessing otherwise "wasted" energy, as Green PG does, is an idea as old as the first sailboat, and bike generators are old hat. A close parallel to Green PG appeared on the 1960s TV show "Gilligan's Island," in which a college professor stranded on the island built a bike generator to power a radio in an attempt to be rescued. Beyond such familiar devices, we need solutions that create energy savings out of the thin air of knowledge, through real engineering breakthroughs.

When we set out to solve a problem, it helps to break it into manageable pieces. Because Green Computing relates directly to power consumption and efficiency, it is sensible to use the dynamic power equation (DPE) for CMOS chips as a universal benchmark. The DPE is written $P = aCV^2f$, where $P$ is the dynamic power consumed; $a$ is the activity factor, the fraction of the chip's gates that switch in a given clock cycle; $C$ is the capacitance that is charged and discharged each time a gate switches; $V$ is the supply voltage from the battery or wall socket (note that it enters squared); and $f$ is the clock frequency, the number of cycles the chip executes per second. The DPE can describe a single chip or an entire system. Beyond the DPE, the key factors limiting computing performance in CMOS technology are summarized by the acronym SLOW [5]: Starvation (too little parallel work to keep the hardware busy), Latency (the delay in reaching remote memory and other resources), Overhead (the extra work of managing parallel tasks and resources) and Waiting (delays caused by contention for shared resources).
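A minimal Python sketch of the DPE makes the relative weight of each term concrete. The chip parameters below (activity 0.1, 1 nF of switched capacitance, 1.2 V, 2 GHz) are hypothetical values chosen for illustration, not measurements of any real chip:

```python
def dynamic_power(activity, capacitance_f, voltage_v, frequency_hz):
    """Dynamic power of switching CMOS logic, P = a * C * V^2 * f, in watts."""
    return activity * capacitance_f * voltage_v ** 2 * frequency_hz


# Hypothetical chip parameters, for illustration only.
base = dict(activity=0.1, capacitance_f=1e-9, voltage_v=1.2, frequency_hz=2e9)

p_base = dynamic_power(**base)
p_half_v = dynamic_power(**{**base, "voltage_v": 0.6})      # halve the voltage
p_half_f = dynamic_power(**{**base, "frequency_hz": 1e9})   # halve the frequency

print(f"baseline: {p_base:.3f} W")
print(f"half voltage: {p_base / p_half_v:.1f}x less power")
print(f"half frequency: {p_base / p_half_f:.1f}x less power")
```

Halving the voltage cuts dynamic power by a factor of four, while halving the frequency only halves it, which is why supply voltage has been the most valuable lever for saving power.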

We will cover several alternatives that address these concerns by combining easy-to-find hardware with new architecture and software solutions. Each follows the Green Computing mantra of promoting efficiency in design as an effective step toward conserving resources. The main benefit of conservation is to preserve resources for later use; in the case of computer systems, these "preserved resources" equate to cost savings and profit.

According to members at the 2010 IEEE/ACM International Conference on Green Computing and Communications, "… by the year 2020, computers might account for 50% of the total US electricity consumption" [6]. With this in mind, it is easy to see how small power savings in personal computers can make a big difference in the global economy. Likewise, large corporate networks or server farms can benefit from small improvements on a node-by-node basis. It is this "think small to reap large rewards" philosophy that empowers some of the best work in Green Computing.

As the internet grows worldwide, large-scale server farms are taking advantage of diverse resources located throughout the country. The availability of less expensive hydroelectric power or existing fiber-optic infrastructure can send a behemoth like Google into the hinterlands looking for a good deal, a strategy that lets Google "save millions of dollars on energy costs" each year [6]. Google further optimizes its energy savings by carefully designing floor plans, running its equipment at higher temperatures, and using outside air or reusable water to improve cooling efficiency. Google also tailors its machinery to the specific tasks it performs, such as internet search, email or applications like Picasa [6]. Google is a clear example of energy savings translating into cost savings and higher profits.

This is all good news for Green Computing, but some of these methods won't transfer well to multi-use server environments or personal computers. Their diverse uses raise problems that must be addressed before green technology can be applied widely in these areas. Handling email or hosting websites uses different parts of a computer node than crunching numbers for engineering or finance. Additionally, we may not be able to move our families and businesses to prime locations. In a June 2012 report, the US Bureau of Labor Statistics states: "For the past five years, electricity prices in the Los Angeles area have been consistently above the national average and ranged from at least 30.0 percent to over 75.0 percent higher" [8]. Given such varied requirements, it may be best to narrow the focus and apply best practices to individual nodes.

Diskless clusters are one example of small improvements applied on a large scale. Server farms and large-scale networks can contain thousands of individual compute nodes. Removing each node's local disk drive, and loading the operating system over the network instead, nearly eliminates the cost of building and maintaining one of the few moving parts in a node. In this case, savings of a few watts per node can add up to tens of thousands of dollars per year [7].

A recent paper presented at the International Conference on Network and Service Management promotes diskless server clusters as an alternative to current disk-drive technology [7]. Diskless clusters of 32, 64 and 128 nodes were set up to run High Performance LINPACK (HPL) benchmarks against disk-based ("diskfull") clusters of the same sizes. HPL measures how fast a cluster solves a large, dense system of linear equations; it descends from LINPACK, a library of FORTRAN subroutines for solving linear equations and linear least-squares problems. The range of cluster sizes and the use of a standard benchmark were intended to give a fair test of both performance and scalability.
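HPL itself is a distributed C program that runs across all nodes using MPI; the sketch below is only a single-process Python illustration of the core computation such a benchmark times, assuming a small random system. It solves a dense system by Gaussian elimination with partial pivoting, converts the run time to a flop rate using the operation count of the classic LINPACK benchmark, (2/3)n³ + 2n², and reports a plain residual as a correctness check (HPL's own check uses a scaled residual):

```python
import random
import time


def solve_dense(a, b):
    """Solve A x = b by Gaussian elimination with partial pivoting (works on copies)."""
    n = len(a)
    a = [row[:] for row in a]
    b = b[:]
    for k in range(n):
        # Partial pivoting: swap in the row with the largest entry in column k.
        p = max(range(k, n), key=lambda i: abs(a[i][k]))
        a[k], a[p] = a[p], a[k]
        b[k], b[p] = b[p], b[k]
        # Eliminate column k below the diagonal.
        for i in range(k + 1, n):
            m = a[i][k] / a[k][k]
            for j in range(k, n):
                a[i][j] -= m * a[k][j]
            b[i] -= m * b[k]
    # Back substitution on the resulting upper-triangular system.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = b[i] - sum(a[i][j] * x[j] for j in range(i + 1, n))
        x[i] = s / a[i][i]
    return x


def linpack_style_run(n, seed=0):
    """Time one dense solve; return (flops per second, max residual |Ax - b|)."""
    rng = random.Random(seed)
    a = [[rng.uniform(-0.5, 0.5) for _ in range(n)] for _ in range(n)]
    b = [rng.uniform(-0.5, 0.5) for _ in range(n)]
    start = time.perf_counter()
    x = solve_dense(a, b)
    elapsed = max(time.perf_counter() - start, 1e-9)
    flops = (2.0 / 3.0) * n ** 3 + 2.0 * n ** 2  # classic LINPACK operation count
    residual = max(abs(sum(a[i][j] * x[j] for j in range(n)) - b[i]) for i in range(n))
    return flops / elapsed, residual


rate, residual = linpack_style_run(100)
print(f"{rate / 1e6:.1f} Mflop/s, max residual {residual:.2e}")
```

A real HPL run distributes a blocked LU factorization across every node and relies on tuned BLAS libraries, which is what makes it a meaningful stress test of a cluster's processors and interconnect.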

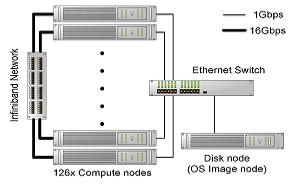

Removing the disk drives improves reliability and avoids the environmental impact of replacing a whole sub-assembly when one miniature part fails. However, it raises the question of where the Operating System (OS) lives. Normally, the OS is stored on each node's local disk drive, where it persists while the node is powered off for maintenance or after a malfunction. In the diskless design, a single disk-equipped node stores the OS image and serves it over a 1 Gbps network link, handling OS boot-up and remote access for the entire cluster (Fig. 1a).

Fig. 1a

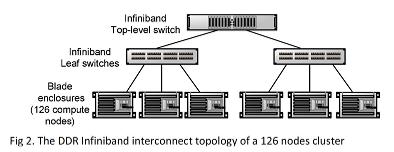

The cluster nodes use InfiniBand Host Channel Adapters (HCAs) supporting 4x Double Data Rate (DDR) links at 16 Gbps, in addition to the 1 Gbps link. The HCAs connect through a 144-port QLogic InfiniBand switch, which leaves room for expansion (Fig. 1b).
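The 16 Gbps figure follows from InfiniBand link arithmetic: a 4x link has four lanes, each DDR lane signals at 5 Gbps, and 8b/10b encoding carries 8 data bits for every 10 bits on the wire. The sketch below works that out and compares idealized transfer times for an OS image over the 1 Gbps link and the InfiniBand link. The 500 MB image size is a hypothetical value, and real transfers add protocol overhead and latency:

```python
def infiniband_data_rate_gbps(lanes, lane_signal_gbps, encoding_efficiency=8 / 10):
    """Usable data rate of an InfiniBand link that uses 8b/10b encoding (SDR/DDR/QDR)."""
    return lanes * lane_signal_gbps * encoding_efficiency


def transfer_seconds(size_megabytes, link_gbps):
    """Idealized time to move a payload over a link, ignoring overhead and latency."""
    bits = size_megabytes * 8e6  # 1 MB taken as 10^6 bytes here
    return bits / (link_gbps * 1e9)


ddr_4x = infiniband_data_rate_gbps(lanes=4, lane_signal_gbps=5.0)
image_mb = 500  # hypothetical OS image size

print(ddr_4x)                              # 16.0
print(transfer_seconds(image_mb, 1.0))     # 4.0
print(transfer_seconds(image_mb, ddr_4x))  # 0.25
```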

Fig. 1b

The switch to a diskless architecture reduces power consumption and the impact of the disk drives' hardware maintenance cycle. Power savings of at least 3 watts per node can amount to tens of thousands of dollars per year for a large-scale installation. Diskless clusters do have drawbacks, however. In the experiment, the diskless clusters froze during swapping tests. The node that holds the disk is also a single point of failure. These weak points can be shored up with more RAM and more advanced network storage technologies. Altogether, diskless architecture offers advantages that can increase computing power while saving money.
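A back-of-the-envelope sketch shows how a few watts per node becomes tens of thousands of dollars. The 10,000-node cluster, the $0.10/kWh electricity price and the optional power usage effectiveness (PUE) factor, which scales IT power up to include cooling and power-distribution overhead, are all assumptions for illustration:

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def annual_savings_usd(watts_saved_per_node, nodes, usd_per_kwh, pue=1.0):
    """Yearly electricity cost avoided by trimming each node's power draw.

    pue (power usage effectiveness) scales IT power up to total facility power,
    so cooling and power-distribution overhead are counted too; 1.0 means none.
    """
    kwh_per_year = watts_saved_per_node * nodes * HOURS_PER_YEAR / 1000 * pue
    return kwh_per_year * usd_per_kwh


print(round(annual_savings_usd(3, 10_000, 0.10)))           # 26280
print(round(annual_savings_usd(3, 10_000, 0.10, pue=1.5)))  # 39420
```

At Los Angeles-area prices, which the BLS report cited above puts 30 to 75 percent above the national average, the same per-node savings would be worth correspondingly more.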

One of the unwitting cornerstones of Green Computing has been the development of cell phones and embedded systems. The pursuit of smaller size and more computing power goes hand in hand with the tenets of green technology. As mobile gadgets get smaller, so do their batteries and available power. Browsing electronics catalogs, we notice an increasing number of chips that run at 3.6 volts (V). This is because lithium-polymer (LiPo) batteries have replaced 9 V alkaline batteries as the power source of choice for mobile devices, and the old 5 V standard for CMOS chips can't be supported by a LiPo cell's 3.7 V rating. The advantage is obvious: the same computation can take almost 40% less power on an embedded device than on a home PC.
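Under the dynamic power equation, holding activity $a$, capacitance $C$ and frequency $f$ fixed, the effect of the voltage change alone can be worked out directly. This is a rough illustration that ignores leakage and every other difference between a desktop chip and an embedded one:

$$
\frac{P_{3.6\,\mathrm{V}}}{P_{5\,\mathrm{V}}} = \frac{aC(3.6)^2 f}{aC(5)^2 f} = \left(\frac{3.6}{5}\right)^2 = \frac{12.96}{25} \approx 0.52
$$

By this term alone, dropping from 5 V to 3.6 V would cut dynamic power by roughly 48%; the same calculation at 3.7 V gives about 0.55, a cut of roughly 45%.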

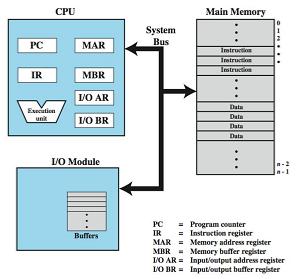

The bad news is that lowering voltage has reached a plateau in power savings, and we can go no further without tremendous advances in hardware and materials [5]. The tipping points are clock frequency and load/store overhead. As we cram more components onto a chip, the need to move information increases. We can raise the clock frequency to meet the need for higher speed, but the DPE shows the drawback: power grows in direct proportion to f. Every clock tick that switches a gate charges or discharges its capacitance (C), dissipating energy. In addition, transistors leak current even when they are not switching, and as the supply voltage (V) is lowered toward the transistors' threshold voltage, this leakage grows and the margin for running at a high clock frequency (f) shrinks. More importantly, CPU activity (a) is limited by the speed at which instructions and data travel between the processor and the memory or I/O modules (Fig. 2a). Increasing bus speed or width comes at the cost of increased power consumption. This standoff is known as the von Neumann bottleneck.
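To make the bottleneck concrete, the sketch below runs a dot product on a toy load/store machine and counts what crosses the shared bus. The instruction set (LOADI, LOAD, STORE, ADD, MUL), the unrolled program and the one-transfer-per-access cost model are hypothetical simplifications, not a real instruction set:

```python
def run(program, memory):
    """Execute a toy load/store program and count traffic over the shared bus.

    Every instruction is fetched over the bus, and every LOAD/STORE moves one
    operand over the same bus; arithmetic happens inside the CPU.
    """
    regs = {}
    stats = {"instruction_fetches": 0, "data_transfers": 0, "arithmetic_ops": 0}
    for op, *args in program:
        stats["instruction_fetches"] += 1
        if op == "LOADI":  # the immediate value travels inside the instruction
            reg, value = args
            regs[reg] = value
        elif op == "LOAD":
            reg, addr = args
            regs[reg] = memory[addr]
            stats["data_transfers"] += 1
        elif op == "STORE":
            reg, addr = args
            memory[addr] = regs[reg]
            stats["data_transfers"] += 1
        elif op in ("ADD", "MUL"):
            dst, x, y = args
            regs[dst] = regs[x] + regs[y] if op == "ADD" else regs[x] * regs[y]
            stats["arithmetic_ops"] += 1
        else:
            raise ValueError(f"unknown instruction {op}")
    return stats


def dot_product_program(n):
    """Unrolled dot product of vectors a and b; the result goes to address 'result'."""
    program = [("LOADI", "acc", 0)]
    for i in range(n):
        program += [
            ("LOAD", "r1", ("a", i)),
            ("LOAD", "r2", ("b", i)),
            ("MUL", "r3", "r1", "r2"),
            ("ADD", "acc", "acc", "r3"),
        ]
    program.append(("STORE", "acc", "result"))
    return program


a, b = [1, 2, 3, 4], [5, 6, 7, 8]
memory = {("a", i): v for i, v in enumerate(a)}
memory.update({("b", i): v for i, v in enumerate(b)})
stats = run(dot_product_program(len(a)), memory)
print(memory["result"], stats)
# 70 {'instruction_fetches': 18, 'data_transfers': 9, 'arithmetic_ops': 8}
```

Even in this idealized model, 27 bus transfers are needed to perform 8 arithmetic operations, and every one of them competes for the same path between processor and memory.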

Von Neumann Architecture System bus = Bottleneck Fig. 2a

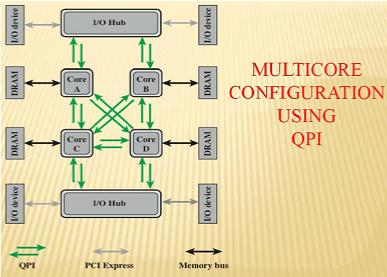

Multi-core processors are arguably subject to the same limitation, which is reflected in the way the cores in a node are connected (Fig. 2b).

Direct pairwise connections, layered protocol and packetized data transfer hampered by load/store costs Fig. 2b

A further example of load/store costs is the way a chip's increasing complexity and shrinking size limit the available off-chip connections. CMOS chips will hit a wall when there is no more room for solder pins (Fig. 2c).

Printed circuit board - big square CMOS (complementary metal-oxide-semiconductor) chip has 128 solder pins Fig. 2c

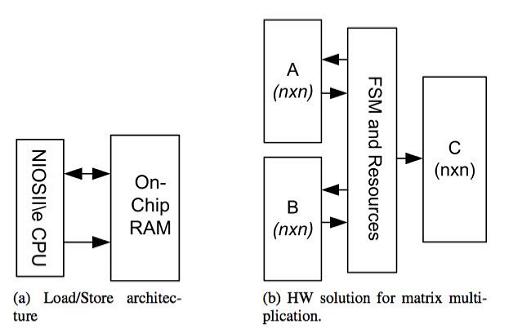

Non-instruction fetch-based architecture offers a solution to the load/store problem. In a 2010 IEEE/ACM conference paper, Abdelghani Renbi, Lennart Lindh and Jerker Delsing compared a processor-based design, built around an Altera Nios soft processor on a Field Programmable Gate Array (FPGA), against hardware (HW) designs for matrix multiplication [6]. In an earlier experiment comparing von Neumann architecture against a non-instruction fetch-based FPGA system, the software system typical of a home computer used 100 times more energy [6]. Renbi et al. then ran a hardware-to-hardware experiment that isolated the differences among three designs: a load/store architecture mimicking von Neumann, a load/store architecture with a hardware multiplier, and a system purpose-built for the test calculations and processing.

All of the systems performed the operations at the same speed, but the load/store systems still came up short in tests of power consumption and heat dissipation. Even the pipelined (von Neumann) architecture with a HW multiplier was less energy efficient than the non-instruction fetch-based design. This suggests that the von Neumann bottleneck costs energy as well as time: the extra switching needed to fetch instructions and move data dissipates heat through capacitance (C), which in turn adds to cooling needs.

The non-instruction fetch-based solution addresses latency and energy loss by using FPGAs and configware to tailor the hardware to a given process. Programming an algorithm, along with its ancillary calculations and processing, into an FPGA eliminates the time spent storing and fetching instructions and data. This approach could be effective for data analytics such as neural-network, DNA or marketing analysis, where highly complex processes that are repeated many times stand to benefit most. However, it may not be effective or useful on an all-purpose computer in a home or business.
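A rough count shows why removing instruction fetches matters more as problems grow. The sketch below compares bus traffic for an n × n matrix multiplication on a simple load/store CPU against a hypothetical hardwired design with enough on-chip buffering to read each input once. Both cost models are simplifying assumptions, not the designs measured in [6]:

```python
def load_store_matmul_traffic(n):
    """Bus transfers for an n x n matrix multiply on a simple load/store CPU.

    Assumes each multiply-accumulate runs as LOAD, LOAD, MUL, ADD (4 instruction
    fetches, 2 operand loads) and each result element needs one STORE
    (1 instruction fetch, 1 operand store). Loop-control instructions are ignored.
    """
    macs = n ** 3
    instruction_fetches = 4 * macs + n * n
    data_transfers = 2 * macs + n * n
    return instruction_fetches + data_transfers


def fixed_function_matmul_traffic(n):
    """Transfers for a hypothetical hardwired multiplier with on-chip operand buffers.

    No instructions are fetched; each input element is read once and each
    result element is written once.
    """
    return 2 * n * n + n * n


for n in (4, 16, 64):
    ls, ff = load_store_matmul_traffic(n), fixed_function_matmul_traffic(n)
    print(f"n={n:3d}: load/store {ls:>9,}  fixed-function {ff:>6,}  ratio {ls / ff:.0f}x")
```

Under these assumptions the ratio is (6n³ + 2n²) / 3n² = 2n + 2/3, so the load/store penalty grows linearly with matrix size.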

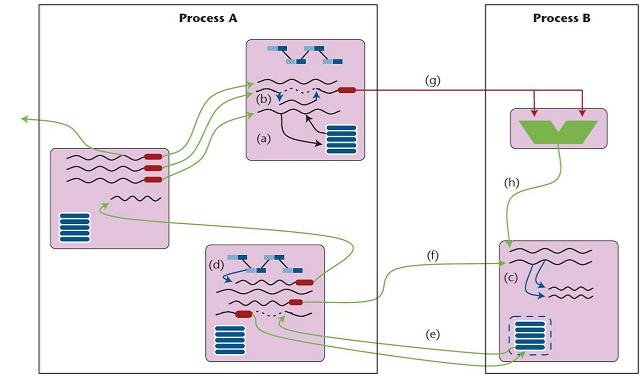

In "Advanced Architectures and Execution Models to Support Green Computing," a team of researchers from Sandia National Laboratories and Louisiana State University outlines a method for truly parallel computing. Their model, called ParalleX, replaces traditional von Neumann architecture with an innovative mix of architecture and algorithms. With efficiency as the goal, ParalleX employs parallel processes, first-class threads, local control objects (LCOs) and parcels. These mechanisms are unfamiliar, and the way they fit into the computing infrastructure can be hard to grasp at first; this is the nature of innovation. As undergraduate students, we learn that parallel computing is "implied," when in reality it is simulated by filling a room with computers or by using multi-core architecture. Real parallel computing is a paradigm that requires a different mindset to comprehend its many outside-the-box changes in computer design and implementation. The model manages asynchrony and allows flow control through continuations, which migrate across both the abstract computation and the physical distributed machine [5].

We can begin to understand the ParalleX architecture by examining it piece by piece (Fig. 4). LCOs enable synchronization through common forms such as mutexes, semaphores and barriers, and through more powerful forms such as dataflow, futures and producer-consumer. LCOs can also employ threads. Threads are sequences of executing operations that share intermediate values and local control state. First-class threads can be accessed globally, and other threads can manipulate them if necessary. Because LCOs can "migrate" through the machine and software to influence flow control, they support a richer form of parallelism than typical concurrent programming. ParalleX processes differ from processes under the MPI message-passing standard in that they can span and share one or more multi-core nodes. Parcels are a form of active message that enables message-driven computation, moving work to remote sites to process data where it resides. The parallel processes are ephemeral: they can be created or destroyed and can span multiple overlapping nodes in the global address space. This seemingly nebulous design package is the key to simultaneous processing. Keeping all the parts busy and in close communication promotes the efficiency and cost savings at the heart of Green Computing.
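The sketch below illustrates two of these ideas with plain Python threads: a future-style LCO that suspends readers until a value arrives, and parcels that carry an action to the node holding the data, with a continuation naming where the result goes. FutureLCO, Parcel and Locality are hypothetical names invented for this example; they are not the API of any ParalleX runtime, and threads in one process merely stand in for separate nodes:

```python
import threading
from dataclasses import dataclass
from typing import Any, Callable


class FutureLCO:
    """Hypothetical future-style LCO: get() suspends the caller until set() supplies a value."""

    def __init__(self):
        self._cond = threading.Condition()
        self._ready = False
        self._value = None

    def set(self, value):
        with self._cond:
            if self._ready:
                raise RuntimeError("future already has a value")
            self._value, self._ready = value, True
            self._cond.notify_all()  # wake every waiting thread

    def get(self):
        with self._cond:
            self._cond.wait_for(lambda: self._ready)
            return self._value


@dataclass
class Parcel:
    """Hypothetical parcel: which data to act on, what to do there, where the result goes."""
    target: str
    action: Callable[[Any], Any]
    continuation: FutureLCO


class Locality:
    """A simulated node that owns data and runs arriving parcels next to that data."""

    def __init__(self, data):
        self.data = data

    def deliver(self, parcel):
        def work():
            parcel.continuation.set(parcel.action(self.data[parcel.target]))

        thread = threading.Thread(target=work)
        thread.start()
        return thread


# Two "nodes" each hold half of the numbers 0..99; the work travels to the data.
node_a = Locality({"block": list(range(0, 50))})
node_b = Locality({"block": list(range(50, 100))})
fa, fb = FutureLCO(), FutureLCO()
threads = [node_a.deliver(Parcel("block", sum, fa)),
           node_b.deliver(Parcel("block", sum, fb))]
total = fa.get() + fb.get()  # blocks until both partial sums arrive
for t in threads:
    t.join()
print(total)  # 4950
```

Neither node ships its data anywhere; only the small action and its result travel, which is the "move work to the data" idea behind parcels.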

Fig. 4 ParalleX architecture

Clearly, a prime disadvantage of Green Computing is its innovative nature. Our survival instincts elicit suspicion when we face unfamiliar solutions, and occasionally a solution is proposed when a problem does not seem to exist. We need to recognize that resistance to change is an animal instinct, and that a systematic process of innovation, peer review, development and implementation will prevail. Ultimately, if scientific proof is not enough, we can use cost savings and increased profits to encourage innovation in Green Computing.

The solutions presented here may or may not be implemented on a grand scale, but each has been tested, and together they give reason for enthusiasm and confidence that Green Computing can play a key role in environmental resource management. The non-instruction fetch-based solution solves the load/store overhead problem by using FPGAs and configware to tailor the hardware to a given process. Its drawback is that the process must be known in advance, and much work remains before this technology can produce a multi-use CPU. The ParalleX architecture tackles the problem through architecture and algorithms while retaining the CMOS technology that has been the foundation of digital computing. The diskless clusters covered here are built from off-the-shelf products, and this hardware/architecture combination could be in use today.

Green Computing is a worthy endeavor. The search for more efficient computing machinery will continue, championed both as a white knight of cost savings and as a way to save the world. The twin threats of global warming and economic catastrophe will have us all searching every nook, cranny and sock drawer for the next "best solution" for cost savings and environmental awareness. The key point is that improvements will increasingly come from within, through advances in smart computing. Innovative architecture changes and their associated algorithms will provide the solutions we need to empower Green Computing. The drawbacks inherent in CMOS, disk drives and von Neumann architecture are becoming more obvious every day. By deductive reasoning, we can conclude that since hardware options are dwindling, the solution lies in the way systems are designed and implemented. The three elements shown here are all promising solutions: diskless clusters, ParalleX architecture and non-instruction fetch-based design. Each combines currently available hardware with unique architecture and programming. By employing architecture as a tool, we can cut costs through Green Computing.

References

[1] Google, "Data Centers," 20 August 2012. Retrieved: http://www.google.com/about/datacenters/index.html#

[2] Kale, A.R.; Muhtaroglu, A., "Green PG: A low cost, modular, pedal-powered 5-20 V parallel DC source for mobile computing applications," 2010 International Conference on Energy Aware Computing (ICEAC), pp. 1-2, 16-18 Dec. 2010, doi: 10.1109/ICEAC.2010.5702306. Retrieved: http://ieeexplore.ieee.org/stamp/stamp.jsp?tp=&arnumber=5702306&isnumber=5702274

[3] Koomey, J.G., "Worldwide Electricity Used in Data Centers," IOP Publishing, 23 September 2008, doi: 10.1088/1748-9326/3/3/034008. Retrieved: http://107.22.214.237/libraries/about-extreme/1748-9326_3_3_034008.pdf

[4] Miller, R., "Report: Google uses about 900,000 Servers," Data Center Knowledge, 1 August 2011. Retrieved: http://www.datacenterknowledge.com/archives/2011/08/01/report-google-uses-about-900000-servers/

[5] Murphy, R.; Sterling, T.; Dekate, C., "Advanced Architectures and Execution Models to Support Green Computing," Computing in Science & Engineering, vol. 12, no. 6, pp. 38-47, Nov.-Dec. 2010, doi: 10.1109/MCSE.2010.124. Retrieved: IEEE Library

[6] Renbi, A.; Lindh, L.; Delsing, J., "Non-Instruction Fetch-Based Architecture Reduces Almost 100 Percent of the Dynamic Power and Energy," 2010 IEEE/ACM Int'l Conference on Green Computing and Communications (GreenCom) & Int'l Conference on Cyber, Physical and Social Computing (CPSCom), pp. 418-424, 18-20 Dec. 2010, doi: 10.1109/GreenCom-CPSCom.2010.94. Retrieved: IEEE Library

[7] Salah, K.; Al-Shaikh, R.; Sindi, M., "Towards green computing using diskless high performance clusters," 2011 7th International Conference on Network and Service Management (CNSM), pp. 1-4, 24-28 Oct. 2011. Retrieved: IEEE Library

[8] U.S. Bureau of Labor Statistics West Information Office, "Average Energy Prices in the Los Angeles Area," News Release, 24 June 2012. Retrieved: http://www.bls.gov/ro9/cpilosa_energy.htm